Large language models (LLMs) with memory are computationally universal. However, mainstream LLMs do not take full advantage of memory, and their memory designs are heavily influenced by biological brains. Because conventional neural memory mechanisms are approximate and prone to error accumulation, they cannot support LLMs in simulating complex reasoning. In this paper, we draw inspiration from modern computer architectures to augment LLMs with symbolic memory for complex multi-hop reasoning. The symbolic memory framework is instantiated as an LLM and a set of SQL databases, where the LLM generates SQL instructions to manipulate the databases. We validate the effectiveness of the proposed memory framework on a synthetic dataset requiring complex reasoning.

While large language models are becoming more capable at language organization and knowledge reasoning, one of their main limitations is handling long contexts: for example, GPT-4 works with a 32K sequence length, and Claude works with 100K. This is a practical issue when chaining LLMs into software for daily and industrial applications. As a personal chatbot, an LLM forgets your preferences, since every day is a new day for it; as a business analytics tool, it can only process data captured within a small time window, since it cannot digest long historical business documents. Because knowledge is stored in a distributed manner within neural networks, maintaining and manipulating neural knowledge precisely and symbolically is difficult. In other words, a neural network's learning and updating process is prone to error accumulation.

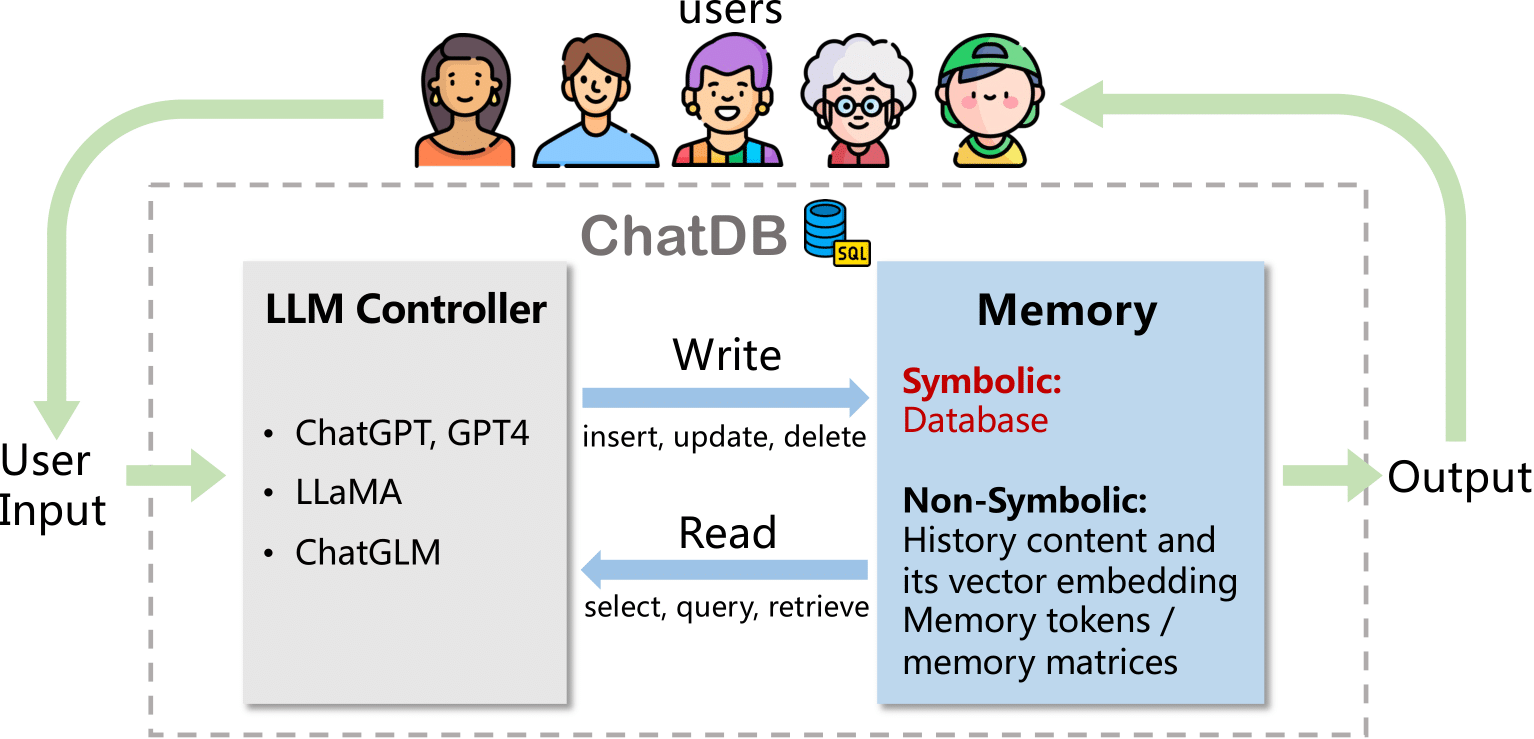

Here, we introduce ChatDB, a novel framework integrating symbolic memory with LLMs. ChatDB explores ways of augmenting LLMs with symbolic memory to handle contexts of arbitrary length. The symbolic memory framework is instantiated as an LLM with a set of SQL databases. The LLM autonomously generates SQL instructions to manipulate the databases (including insertion, selection, update, and deletion), aiming to complete complex tasks that require multi-hop reasoning and long-term symbolic memory. This contrasts with existing uses of databases, where the database sits outside the learning system and passively stores information as instructed by humans. In addition, previous work focused mainly on selection operations and did not support insertion, update, and deletion on the database.
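As a rough illustration of the four operation types named above, the following sketch runs a hard-coded list of SQL statements against an in-memory SQLite database. The `preferences` table, its columns, and the `run_statements` helper are assumptions made for this example; in ChatDB the statements would be generated by the LLM rather than written by hand.

```python
import sqlite3

# Hypothetical schema: one table acting as the symbolic memory.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE preferences (user_name TEXT PRIMARY KEY, favorite_fruit TEXT)"
)

# Statements of the kind an LLM might emit; hard-coded here for illustration.
generated_sql = [
    "INSERT INTO preferences VALUES ('alice', 'apple')",
    "INSERT INTO preferences VALUES ('bob', 'pear')",
    "UPDATE preferences SET favorite_fruit = 'mango' WHERE user_name = 'alice'",
    "DELETE FROM preferences WHERE user_name = 'bob'",
    "SELECT user_name, favorite_fruit FROM preferences",
]


def run_statements(connection, statements):
    """Execute each statement in order and return the rows of the last one."""
    rows = []
    for sql in statements:
        rows = connection.execute(sql).fetchall()
    connection.commit()
    return rows


result = run_statements(conn, generated_sql)
print(result)  # [('alice', 'mango')]
```

Because the memory is an ordinary database, every write is exact and can be inspected afterwards with a plain `SELECT`.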

In this section, we first describe the overall framework of our proposed ChatDB. Given a user input in natural language and optional schemas of existing tables in the database (not required if there are no existing tables), ChatDB aims to manipulate the symbolic memory (i.e., the external database) and perform multi-hop reasoning to respond to the user's input. Then, we delve into the details of the chain-of-memory, which is the crucial component of ChatDB.

The ChatDB framework consists of three main stages: input processing, chain-of-memory, and response summary.

In the input processing stage, if responding to the user input requires memory, ChatDB uses LLMs to generate a series of intermediate steps for manipulating the symbolic memory. Otherwise, it uses LLMs to generate a response directly.

In the chain-of-memory stage, ChatDB executes a series of intermediate steps to interact with the external symbolic memory. ChatDB manipulates the external memory in sequence according to the previously generated SQL statements, which include operations such as insert, update, select, and delete. The external database executes each SQL statement, updates the database, and returns the results. ChatDB then decides, based on the returned results, whether the next operation step needs to be updated, and continues executing steps in the same way until all operations on the memory are completed.
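The execution loop described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the `fruits` and `sales` tables are hypothetical, and each step is a plain Python callable that builds its SQL from the results of earlier steps, standing in for the LLM's decision about whether to revise the next step.

```python
import sqlite3


def run_chain_of_memory(conn, steps):
    """Execute steps in order; each step can read the results of earlier ones.

    `steps` is a list of callables: step(previous_results) -> (sql, params).
    """
    results = []
    for make_step in steps:
        sql, params = make_step(results)  # build the step from earlier results
        rows = conn.execute(sql, params).fetchall()
        results.append(rows)
    conn.commit()
    return results


# Hypothetical schema and data for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE fruits (name TEXT PRIMARY KEY, price REAL, stock INTEGER);
    CREATE TABLE sales (fruit TEXT, quantity INTEGER, total REAL);
    INSERT INTO fruits VALUES ('apple', 2.0, 10);
    """
)

# "A customer bought 3 apples": look up the price, record the sale,
# reduce the stock, then read the stock back.
steps = [
    lambda prev: ("SELECT price FROM fruits WHERE name = ?", ("apple",)),
    lambda prev: (
        "INSERT INTO sales VALUES (?, ?, ?)",
        ("apple", 3, 3 * prev[0][0][0]),  # total uses the price from step 1
    ),
    lambda prev: (
        "UPDATE fruits SET stock = stock - ? WHERE name = ?",
        (3, "apple"),
    ),
    lambda prev: ("SELECT stock FROM fruits WHERE name = ?", ("apple",)),
]

results = run_chain_of_memory(conn, steps)
print(results[-1])  # [(7,)]
```

The second step cannot be written until the first has returned, which is the dependency between steps that the chain-of-memory stage resolves at execution time.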

In the response summary stage, ChatDB summarizes the final response to the user based on the results of a series of chain-of-memory steps.
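Taken together, the three stages suggest a control flow like the one below. This is only a sketch: `plan`, `respond_directly`, and `summarize` are hypothetical stand-ins for LLM calls and are replaced here by toy functions, and for brevity the middle stage runs the planned statements without revising them between steps.

```python
import sqlite3


def answer(user_input, conn, plan, respond_directly, summarize):
    """Route a user input through the three stages."""
    # Stage 1: input processing. `plan` returns SQL steps, or None if the
    # input can be answered without memory.
    steps = plan(user_input)
    if steps is None:
        return respond_directly(user_input)
    # Stage 2: chain-of-memory (simplified: no step revision in this sketch).
    results = [conn.execute(sql).fetchall() for sql in steps]
    conn.commit()
    # Stage 3: response summary.
    return summarize(user_input, results)


# Toy stand-ins for the LLM calls (assumptions for this example only).
def toy_plan(text):
    if "stock" in text:
        return ["SELECT stock FROM fruits WHERE name = 'apple'"]
    return None


def toy_respond(text):
    return "Hello!"


def toy_summarize(text, results):
    return f"Apples in stock: {results[-1][0][0]}"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE fruits (name TEXT PRIMARY KEY, stock INTEGER)")
conn.execute("INSERT INTO fruits VALUES ('apple', 7)")

with_memory = answer("How many apples are in stock?", conn,
                     toy_plan, toy_respond, toy_summarize)
without_memory = answer("Hi there", conn,
                        toy_plan, toy_respond, toy_summarize)
print(with_memory)     # Apples in stock: 7
print(without_memory)  # Hello!
```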

The purpose of chain-of-memory is to enhance the reasoning capabilities and robustness of LLMs when manipulating symbolic memory. The approach converts the user's input into a sequence of intermediate memory operation steps, enabling LLMs to manipulate the memory symbolically with greater accuracy and effectiveness. Once a complex memory operation has been broken down into multiple simple steps, each step is executed in turn: before running the next step, ChatDB integrates the results of the previous steps and determines whether the next step needs to be modified. Note that each SQL operation step may involve one or more tables in the database.

The advantages of chain-of-memory are twofold. Firstly, it breaks down a complex memory operation into multiple simple intermediate steps, enabling LLMs to perform complex memory manipulations with higher accuracy, enhancing their multi-hop reasoning ability over symbolic memory. Secondly, by using a sequence of intermediate memory operations, chain-of-memory improves the robustness of LLMs when handling complex, multi-table manipulations. This approach enables ChatDB to handle edge cases and unexpected scenarios better, making it a promising method for complex and diverse real-world applications.

@misc{hu2023chatdb,
  title={ChatDB: Augmenting LLMs with Databases as Their Symbolic Memory},
  author={Chenxu Hu and Jie Fu and Chenzhuang Du and Simian Luo and Junbo Zhao and Hang Zhao},
  year={2023},
  eprint={2306.03901},
  archivePrefix={arXiv},
  primaryClass={cs.AI}
}